Define Your Vision and Mission

Conduct a SWOT Analysis

Set SMART Goals

Develop Strategic Objectives

Identify Key Strategies

Implement Action Plans

Allocate Resources

Monitor and Evaluate Progress

Communicate and Engage

…

Art, Entertainment, and Media Management

The Intersection of Art, Entertainment, and Business

Art, entertainment, and media have long been intertwined, captivating audiences and shaping cultures. Managing these industries requires a distinctive blend of creativity, business acumen, and a deep understanding of consumer behavior. From the intricacies of film production to the complexities of digital marketing, art, entertainment, and media management encompasses a wide range of responsibilities.

Navigating the Evolving Landscape

The art, entertainment, and media landscape is constantly evolving, driven by technological advancements and shifting consumer preferences. The rise of streaming platforms, social media, and virtual reality has created new opportunities and challenges for industry professionals. Those who can adapt to these changes and embrace innovation will be well positioned to succeed.

The Role of Creativity and Innovation

Creativity and innovation are essential components of art, entertainment, and media management. The ability to generate original ideas and concepts is crucial for creating engaging content that resonates with audiences. Embracing new technologies and experimenting with different formats can also help professionals stay ahead of the curve and capture consumers' attention.

Understanding Consumer Behavior

A deep understanding of consumer behavior is vital for success in art, entertainment, and media management. By studying audience preferences, demographics, and trends, professionals can tailor their content and marketing strategies to meet the needs of their target market. This knowledge can also be used to identify emerging opportunities and make informed decisions about investments.

The Importance of Business Acumen

In addition to creativity and innovation, business acumen is essential for managing art, entertainment, and media enterprises. This includes financial planning, budgeting, and resource allocation. Understanding the economics of the industry is crucial for making sound business decisions and ensuring the long-term sustainability of projects.

The Challenges of Intellectual Property

Protecting intellectual property is a major challenge in the art, entertainment, and media industries. Copyright infringement, piracy, and unauthorized distribution can have significant financial and reputational consequences. Effective legal strategies and digital rights management tools are essential for safeguarding creative works.

The Future of Art, Entertainment, and Media Management

The future of art, entertainment, and media management is filled with exciting possibilities. As technology continues to advance and consumer preferences evolve, new opportunities will arise for creative professionals and business leaders. By staying informed about industry trends, embracing innovation, and developing a strong understanding of consumer behavior, individuals can position themselves for success in this dynamic and ever-changing field.…

QuickBooks Customer Support

A Lifeline for Small Businesses

The Time I Needed Help (A Personal Story)

Multiple Ways to Get Help

What to Expect from QuickBooks Customer Support

Tips for Getting the Most Out of QuickBooks Customer Support

…

Properties in Al Reem Island, Abu Dhabi

Al Reem Island, situated off the northeastern coast of Abu Dhabi, is one of the emirate’s most prestigious residential and commercial hubs. Known for its luxurious high-rises, modern infrastructure, and vibrant community, Al Reem Island offers an exceptional living experience. This article explores the various properties in Al Reem Island, highlighting why it’s an ideal location for residents and investors alike.

Al Reem Island Overview

Al Reem Island is a natural island transformed into a bustling urban community. It features a mix of residential, commercial, and recreational spaces, making it a self-sufficient locality. The island is connected to Abu Dhabi city via multiple bridges, ensuring easy access to the mainland.

Residential Properties on Al Reem Island

Al Reem Island boasts a diverse range of residential properties, including apartments, villas, and townhouses. Some key residential areas include:

- Shams Abu Dhabi: A prominent district on Al Reem Island, offering a blend of high-rise apartments and low-rise villas. Shams Abu Dhabi is known for its waterfront views and luxurious living spaces.

- Marina Square: This area features a variety of residential towers with state-of-the-art amenities such as swimming pools, gyms, and children’s play areas. Marina Square is popular among expatriates and young professionals.

- City of Lights: Known for its futuristic design and modern architecture, the City of Lights offers a mix of residential, commercial, and retail spaces. It is an ideal location for those seeking a vibrant urban lifestyle.

Commercial Properties on Al Reem Island

Al Reem Island is not only a residential hub but also a thriving commercial center. The island hosts numerous office spaces, retail outlets, and business centers, making it an attractive location for businesses and investors.

- Boutik Mall: Located within the Sun & Sky Towers, Boutik Mall offers a variety of retail shops, dining options, and entertainment facilities, catering to the needs of residents and visitors alike.

- Office Towers: Al Reem Island features several high-rise office buildings equipped with modern facilities, providing an ideal environment for businesses of all sizes.

Amenities and Facilities

Al Reem Island offers a wide range of amenities and facilities to enhance the quality of life for its residents:

- Educational Institutions: The island is home to several reputable schools and nurseries, ensuring quality education for children.

- Healthcare: Residents have access to world-class healthcare facilities, including clinics and hospitals.

- Parks and Recreation: Al Reem Island features numerous parks, jogging tracks, and recreational areas, promoting a healthy and active lifestyle.

- Dining and Entertainment: The island offers a plethora of dining options, cafes, and entertainment venues, catering to diverse tastes and preferences.

Investment Opportunities

Investing in Al Reem Island properties offers numerous benefits:

- High Rental Yields: The island’s prime location and modern amenities make it a sought-after destination, resulting in high rental yields.

- Capital Appreciation: Properties on Al Reem Island have shown consistent appreciation in value, making them a sound investment choice.

- Diverse Portfolio: Investors can choose from a wide range of properties, from luxury apartments to commercial spaces, diversifying their investment portfolio.

Sustainable Living

Al Reem Island places a strong emphasis on sustainability and green living. Many buildings on the island are designed with energy-efficient systems and environmentally friendly materials, promoting a sustainable lifestyle for residents.

Conclusion

Al Reem Island stands out as a premier destination for both living and investing in Abu Dhabi. With its modern infrastructure, luxurious properties, and vibrant community, it offers an unparalleled living experience. Whether you are looking to rent or buy, Al Reem Island provides a wealth of opportunities to meet your needs.…

Why Choose Dubai for E-commerce Website Development?

Dubai has positioned itself as one of the leading hubs for e-commerce businesses. Its strategic location between East and West makes it well suited to international trade. The city also has high internet penetration and a growing number of online shoppers. Many businesses choose e-commerce website development in Dubai because it gives them access to a tech-savvy market with significant purchasing power.

Moreover, Dubai’s business-friendly government policies, such as free zones with favorable tax treatment and simplified regulatory frameworks, make it easier for entrepreneurs to start and grow their online ventures. With a booming economy and a diverse population, launching an e-commerce platform here opens up a wide range of opportunities for businesses.

Cryptocurrency Trends – Exploring the Latest Developments

Cryptocurrency, once considered a niche concept, has evolved into a dynamic and transformative force within the global financial landscape. As the technology underpinning cryptocurrencies continues to advance, new trends and developments emerge, shaping the trajectory of this decentralized financial revolution. This article explores the latest cryptocurrency trends, with a keen focus on the computing innovations that drive these advancements.

The Rise of Cryptocurrencies

Decentralization and Blockchain Technology

At the heart of cryptocurrencies is blockchain technology—a decentralized and distributed ledger that records transactions across a network of computers. This fundamental shift from centralized financial systems to decentralized, trustless networks has empowered cryptocurrencies to gain traction and disrupt traditional financial paradigms.
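To make the idea of a distributed ledger concrete, here is a minimal sketch of a hash-linked chain in Python. It is a teaching toy under simplifying assumptions (no network, no consensus, transactions as plain strings), and the names `Block`, `build_chain`, and `is_valid` are hypothetical. Each block stores the hash of its predecessor, so altering any earlier record breaks the chain's consistency.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class Block:
    index: int
    transactions: list[str]
    prev_hash: str
    block_hash: str = field(init=False)

    def __post_init__(self) -> None:
        # Computed once at creation, over the contents plus the predecessor's hash.
        self.block_hash = self.compute_hash()

    def compute_hash(self) -> str:
        payload = json.dumps(
            {"index": self.index, "transactions": self.transactions, "prev_hash": self.prev_hash},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode()).hexdigest()


def build_chain(batches: list[list[str]]) -> list[Block]:
    """Create a genesis block, then one block per batch of transactions."""
    chain = [Block(0, [], "0" * 64)]
    for txs in batches:
        chain.append(Block(len(chain), txs, chain[-1].block_hash))
    return chain


def is_valid(chain: list[Block]) -> bool:
    """Recompute every block's hash and confirm each block points at its predecessor."""
    for i, cur in enumerate(chain):
        if cur.block_hash != cur.compute_hash():
            return False  # contents no longer match the stored hash
        if i > 0 and cur.prev_hash != chain[i - 1].block_hash:
            return False  # broken link to the previous block
    return True
```

Editing a transaction inside any block after it is created makes `is_valid` return `False`, because the stored hash no longer matches the recomputed one.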

Early Pioneers: Bitcoin and Beyond

Bitcoin, introduced in 2009, marked the inception of cryptocurrencies and blockchain. Since then, a plethora of alternative cryptocurrencies, often referred to as altcoins, have been created, each with its own features and use cases. Ethereum, launched in 2015, popularized general-purpose smart contracts, enabling the creation of decentralized applications (DApps) and laying the foundation for a new era of blockchain innovation.

Computing the Trends: Key Developments in Cryptocurrency

1. DeFi – Decentralized Finance

Decentralized Finance, or DeFi, represents a paradigm shift in financial services. Leveraging blockchain and smart contract technology, DeFi platforms aim to recreate and enhance traditional financial instruments such as lending, borrowing, and trading without intermediaries like banks. Because smart contracts are executed by the network's nodes exactly as written, agreements settle automatically, and anyone can inspect both the rules and the resulting transactions on-chain, which brings transparency and efficiency to the DeFi ecosystem.
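As an illustration of a financial agreement enforced purely by code, the sketch below models a constant-product market maker, the x * y = k pricing rule popularized by Uniswap for decentralized trading. It is a simplified Python model, not contract code: real on-chain implementations use integer arithmetic, and the class name and the 0.3% default fee are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class ConstantProductPool:
    """Toy two-token pool that keeps reserve_x * reserve_y at least constant across swaps."""
    reserve_x: float
    reserve_y: float
    fee: float = 0.003  # assumed swap fee (0.3%), kept in the pool

    def quote_x_for_y(self, amount_x: float) -> float:
        # Only the post-fee part of the input counts toward the invariant.
        effective_in = amount_x * (1 - self.fee)
        k = self.reserve_x * self.reserve_y
        new_reserve_y = k / (self.reserve_x + effective_in)
        return self.reserve_y - new_reserve_y

    def swap_x_for_y(self, amount_x: float) -> float:
        if amount_x <= 0:
            raise ValueError("amount must be positive")
        out_y = self.quote_x_for_y(amount_x)
        # The full input (fee included) stays in the pool, so k grows slightly when fee > 0.
        self.reserve_x += amount_x
        self.reserve_y -= out_y
        return out_y
```

The price is never set by an operator: it falls out of the reserves, and each trade moves the price against the trader in proportion to the trade's size relative to the pool.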

2. NFTs – Non-Fungible Tokens

Non-Fungible Tokens (NFTs) have taken the art and entertainment world by storm. NFTs are unique digital tokens that record ownership of specific items, often digital art, music, or collectibles. The computing innovation here lies in using a blockchain to create scarcity and provenance for digital content. Artists and creators can tokenize their work, and buyers hold a token whose ownership is publicly verifiable on-chain, creating a new market for digital assets. Owning the token does not by itself necessarily transfer copyright in the underlying work; that depends on the terms of the sale.

Computing’s Role in Enhancing Security

Cryptocurrency transactions rely on robust security measures, and computing plays a central role in making blockchain networks resilient. Cryptographic hashing and digital signatures ensure the integrity and authenticity of transactions (on most public blockchains, transactions are visible to everyone rather than confidential), while consensus mechanisms such as Proof of Work (PoW) and Proof of Stake (PoS) make it prohibitively expensive for an attacker to rewrite the ledger or double-spend funds. As the cryptocurrency landscape evolves, ongoing developments in cryptographic algorithms and network security are paramount.
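The core idea of Proof of Work fits in a few lines: miners search for a nonce that makes a block's hash meet a difficulty target, and anyone can check the result with a single hash. This is a simplified sketch; Bitcoin itself applies double SHA-256 to a binary block header and compares the result against a numeric target, whereas the hypothetical `mine` and `verify` functions here simply require a number of leading hex zeros.

```python
import hashlib


def mine(block_data: str, difficulty: int) -> tuple[int, str]:
    """Brute-force a nonce whose SHA-256 digest starts with `difficulty` hex zeros."""
    target_prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(target_prefix):
            return nonce, digest
        nonce += 1  # each extra zero multiplies the expected work by 16


def verify(block_data: str, nonce: int, difficulty: int) -> bool:
    # Verification costs one hash, no matter how long mining took.
    digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
    return digest.startswith("0" * difficulty)
```

This asymmetry, expensive to produce and cheap to check, is what makes rewriting history costly: an attacker would have to redo the work for every block after the one being altered.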

Challenges and Considerations in Cryptocurrency Computing

1. Scalability

The scalability of blockchain networks is a persistent challenge. As cryptocurrencies grow in popularity, networks must process more transactions, more quickly. Approaches including layer-2 solutions, sharding, and improved consensus algorithms are being explored to address these concerns and increase transaction throughput.

2. Energy Consumption

Proof of Work, the consensus mechanism used by Bitcoin and some other cryptocurrencies, has faced criticism for its energy-intensive nature: the computing power devoted to mining has raised concerns about environmental impact. In response, alternative consensus mechanisms such as Proof of Stake, which is far more energy-efficient, are gaining traction as a sustainable foundation for future blockchain networks; Ethereum, for example, switched from Proof of Work to Proof of Stake in 2022.
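In Proof of Stake, the right to propose the next block is assigned roughly in proportion to how much value a validator has staked, so no hashing race is needed. The sketch below shows only that weighting idea. The validator names and stake amounts are made up, and production protocols derive their randomness in ways validators cannot easily bias, rather than from a locally seeded generator as here.

```python
import random


def select_validator(stakes: dict[str, float], rng: random.Random) -> str:
    """Choose one validator with probability proportional to its stake."""
    validators = list(stakes)
    weights = [stakes[v] for v in validators]
    # random.choices does weighted sampling; k=1 draws a single winner.
    return rng.choices(validators, weights=weights, k=1)[0]


stakes = {"alice": 70.0, "bob": 20.0, "carol": 10.0}  # hypothetical stakes
rng = random.Random(42)  # fixed seed only to make the demo reproducible
picks = [select_validator(stakes, rng) for _ in range(10_000)]
print({v: picks.count(v) for v in stakes})
```

Over many rounds, `alice` should be selected roughly 70% of the time, mirroring her share of the total stake.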

Future Trends: Where Cryptocurrency and Computing Converge

1. Central Bank Digital Currencies (CBDCs)

Governments and central banks are exploring the concept of Central Bank Digital Currencies (CBDCs) as a digital representation of their national currency. The integration of CBDCs involves leveraging computing technologies to create secure and efficient digital payment systems. CBDCs aim to combine the benefits of cryptocurrencies, such as fast transactions and financial inclusion, with the stability and regulatory oversight of traditional fiat currencies.

2. Interoperability and Cross-Chain Solutions

As the number of blockchain networks and cryptocurrencies proliferates, the need for interoperability and cross-chain solutions becomes crucial. Computing innovations in this space aim to create seamless communication between disparate blockchain networks, enabling the transfer of assets and data across different platforms. Interoperability is a key trend that seeks to overcome the current siloed nature of blockchain ecosystems.

Conclusion: Navigating the Cryptocurrency Frontier with Computing

Cryptocurrencies are navigating uncharted territories, propelled by computing innovations that redefine the way we conceptualize and engage with financial systems. From DeFi transforming traditional finance to NFTs revolutionizing digital ownership, the computing power at the core of these trends is reshaping the future of finance and digital assets.

As we navigate the cryptocurrency frontier, the synergy between computing and blockchain technology will continue to unlock new possibilities. Addressing scalability, energy consumption, and fostering interoperability are challenges that the computing community is actively working to overcome. The convergence of cryptocurrency and computing represents a dynamic and ever-evolving landscape, where the future holds promises of enhanced security, sustainability, and innovation in the decentralized financial ecosystem.…

The Era of Wearable Tech – Integrating with Daily Life

Wearable technology, once confined to the realms of science fiction, has become an integral part of our daily lives, seamlessly blending fashion with function. From smartwatches and fitness trackers to augmented reality glasses, wearable tech has evolved rapidly, offering users a plethora of features beyond simple timekeeping or step counting. At the heart of this technological revolution is the sophisticated integration of computing technologies into compact, portable devices. This article explores the era of wearable tech, examining its diverse applications and the pivotal role of computing in bringing these innovations to life.

The Evolution of Wearable Technology

Beyond the Wristwatch

Wearable tech has transcended its origins as a mere accessory, becoming a dynamic field of innovation. While wristwatches with added functionalities marked the early stages, the contemporary landscape includes an array of devices that cater to various needs and preferences. Smart clothing, health monitoring devices, and even smart jewelry have proliferated, each leveraging computing technologies to enhance user experiences.

Computing Miniaturization

The miniaturization of computing components, driven by advancements in semiconductor technology, has been a game-changer for wearable devices. Smaller, more powerful processors, energy-efficient sensors, and compact yet high-resolution displays have paved the way for wearable tech that seamlessly integrates into our daily routines.

Computing Capabilities in Wearable Tech

1. Sensors and Data Collection

Wearable devices are equipped with an array of sensors that collect real-time data. Whether it’s heart rate monitoring, GPS tracking, or environmental sensing, these sensors provide valuable insights into various aspects of users’ lives. Computing technologies process and analyze this data, offering users actionable information to make informed decisions about their health, fitness, and overall well-being.

2. User Interface and Interaction

The user interface of wearable devices relies on computing technologies to deliver a seamless and intuitive experience. Touchscreens, voice recognition, and gesture controls are commonplace, providing users with convenient ways to interact with their devices. The integration of natural language processing and machine learning further enhances the responsiveness and adaptability of these interfaces.

Wearable Tech Applications in Daily Life

1. Health and Fitness Tracking

Wearable devices have revolutionized the way we approach health and fitness. From step counting to sleep tracking, these devices provide real-time feedback on various health metrics. Computing algorithms process this data, offering personalized insights and recommendations for users to maintain a healthy lifestyle.
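A common starting point for step counting is to look for peaks in the magnitude of the accelerometer signal. The sketch below counts upward crossings of a fixed threshold, with a minimum gap between steps; the function name, the 11 m/s² threshold, and the gap are illustrative assumptions, and commercial trackers use considerably more elaborate filtering and adaptive thresholds.

```python
import math


def count_steps(samples: list[tuple[float, float, float]],
                threshold: float = 11.0, min_gap: int = 5) -> int:
    """Count steps as upward crossings of `threshold` in acceleration magnitude (m/s^2).

    `threshold` and `min_gap` (measured in samples) are illustrative, not tuned values.
    """
    steps = 0
    last_step = -min_gap
    prev_mag = 0.0
    for i, (x, y, z) in enumerate(samples):
        mag = math.sqrt(x * x + y * y + z * z)  # orientation-independent magnitude
        # Count a step on an upward crossing that is not too close to the previous one.
        if prev_mag < threshold <= mag and i - last_step >= min_gap:
            steps += 1
            last_step = i
        prev_mag = mag
    return steps
```

Using the magnitude rather than a single axis means the count does not depend on how the device is worn, while the minimum gap suppresses double counts from jitter around the threshold.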

2. Smartwatches and Notifications

Smartwatches have evolved beyond timekeeping to become sophisticated notification centers. Paired with smartphones, they deliver alerts for calls, messages, and app notifications directly to users’ wrists. The computing capabilities of these devices enable them to triage and display information in a concise and user-friendly manner.

3. Augmented Reality (AR) Glasses

AR glasses overlay digital information onto the user’s physical surroundings, creating immersive experiences. Advanced optics, combined with real-time processing of camera and sensor data, are crucial for delivering seamless AR interactions. Applications range from navigation assistance to hands-free communication and enhanced productivity in various industries.

Computing Challenges and Innovations

1. Battery Life Optimization

The compact form factor of wearable devices limits the size of the batteries they can accommodate. Optimizing battery life while maintaining computing performance is a constant challenge. Advances in energy-efficient processors and low-power display technologies help extend battery life in modern wearables.

2. Data Security and Privacy

Wearable devices collect sensitive personal data, raising concerns about security and privacy. Computing solutions must implement robust encryption, secure data storage, and stringent privacy controls to protect users’ information from unauthorized access or potential breaches.

Future Trends: Computing Horizons in Wearable Tech

1. Health Monitoring and Medical Applications

The future of wearable tech holds immense potential for advancing health monitoring and medical applications. Wearable devices could continuously monitor vital signs, detect early signs of medical conditions, and even administer personalized treatments. Computing technologies will play a central role in the development of these life-saving innovations.

2. Integration with Smart Environments

Wearable devices are poised to become integral components of smart environments. Interconnected with other IoT (Internet of Things) devices and smart home systems, wearables could seamlessly control and monitor various aspects of our surroundings. Computing technologies will orchestrate these interactions, creating a cohesive and intelligent ecosystem.

Computing’s Role in Fashion and Function

1. Design Aesthetics

The marriage of fashion and technology in wearable devices is a delicate balance. Computing technologies enable designers to create sleek, stylish devices without compromising functionality. The integration of displays, sensors, and other components requires a deep understanding of both design principles and technological constraints.

2. Customization and Personalization

Wearable devices are increasingly designed to cater to individual preferences and needs. Computing algorithms analyze user data to offer personalized recommendations, adaptive settings, and tailored user experiences. This level of customization enhances user satisfaction and engagement with wearable tech.…

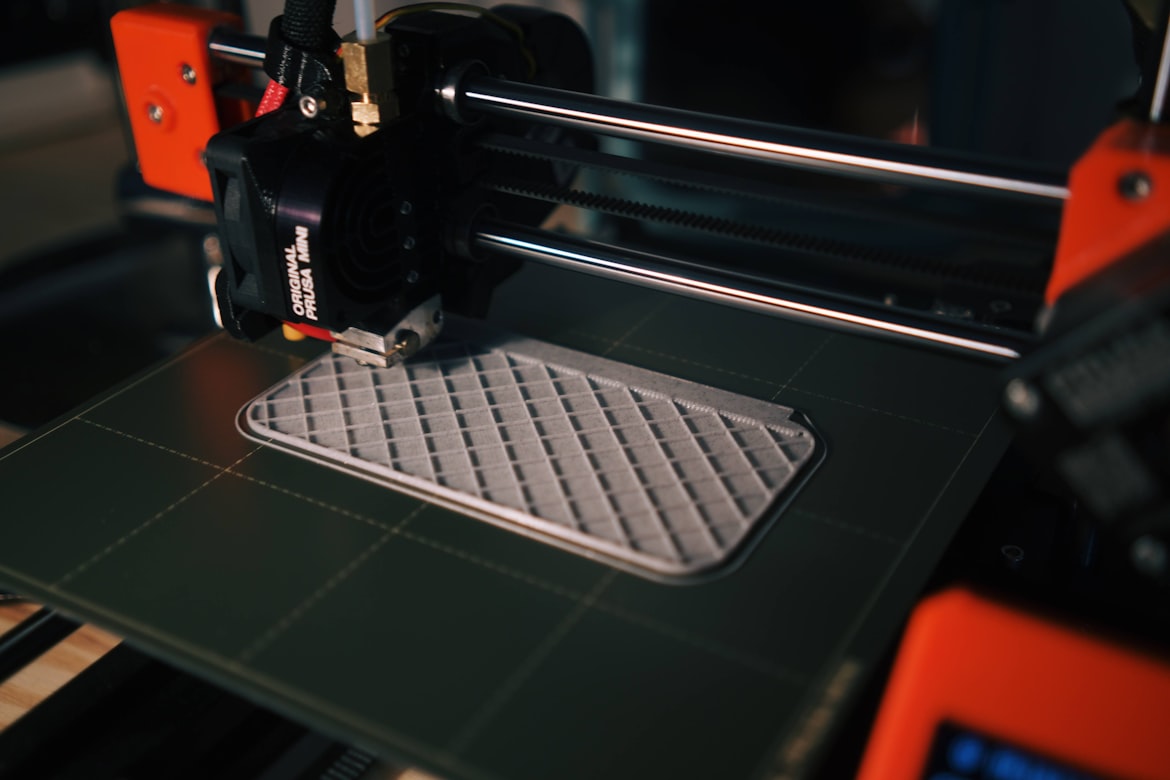

3D Printing Revolution – From Prototypes to Prosthetics

The advent of 3D printing has ushered in a revolutionary era where the physical world can be digitally manifested with unprecedented precision and creativity. Originally conceived as a rapid prototyping technology, 3D printing, also known as additive manufacturing, has transcended its initial purpose. Today, it has permeated various industries, from manufacturing and healthcare to aerospace and art. At the heart of this transformative journey lies the integral role of computing technologies. This article explores the 3D printing revolution, highlighting its diverse applications and the indispensable contribution of computing in pushing the boundaries of what is possible.

The Genesis of 3D Printing

Prototyping and Beyond

The roots of 3D printing trace back to the 1980s, when it was primarily used for rapid prototyping in industrial settings. This groundbreaking technology allowed engineers and designers to translate digital models into physical prototypes swiftly. The early stages of 3D printing were marked by limited materials and relatively simple designs.

Computing Catalyst

As computing power increased, so did the capabilities of 3D printing. The intricate algorithms and sophisticated software required to precisely control the layer-by-layer deposition of materials became feasible with advancements in computing technologies. This convergence paved the way for 3D printing to evolve from a niche prototyping tool to a disruptive force across diverse industries.

Computing in 3D Printing Processes

1. Digital Modeling and Design

The journey of a 3D-printed object begins in the digital realm. Complex computer-aided design (CAD) software allows designers to create intricate and detailed 3D models. These digital blueprints serve as the foundation for the entire 3D printing process. The efficiency and precision of modern CAD software, powered by robust computing resources, enable the creation of highly sophisticated and customized designs.

2. Slicing Algorithms

Once a digital model is created, slicing algorithms come into play. Slicing involves breaking down the 3D model into numerous thin layers, akin to the layers of a cake. Each layer represents a cross-section of the object, and these layers guide the 3D printer in the additive manufacturing process. Computing technologies ensure that the slicing process is accurate and optimized for the specific 3D printer being used.
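At the heart of slicing is a simple geometric operation: intersecting each triangle of the model's mesh with a horizontal plane. The sketch below, using a hypothetical function name and plain tuples for points, returns the line segment where one triangle crosses height `z`. A full slicer would chain these segments into closed outlines for each layer and then generate toolpaths; for brevity, this version ignores the degenerate case of a vertex lying exactly on the plane.

```python
from typing import Optional

Point = tuple[float, float, float]
Segment = tuple[tuple[float, float], tuple[float, float]]


def slice_triangle(tri: tuple[Point, Point, Point], z: float) -> Optional[Segment]:
    """Return the 2D segment where a triangle crosses the plane at height z, or None."""
    crossings = []
    for i in range(3):
        (x1, y1, z1), (x2, y2, z2) = tri[i], tri[(i + 1) % 3]
        # An edge crosses the plane only if its endpoints lie strictly on opposite sides.
        if (z1 - z) * (z2 - z) < 0:
            t = (z - z1) / (z2 - z1)  # fraction of the way along the edge
            crossings.append((x1 + t * (x2 - x1), y1 + t * (y2 - y1)))
    # With strict sign changes, a closed triangle yields either 0 or 2 crossings.
    return (crossings[0], crossings[1]) if len(crossings) == 2 else None
```

Repeating this for every triangle at heights spaced by the chosen layer thickness produces the stack of cross-sections that guides the printer.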

Diverse Applications of 3D Printing

1. Manufacturing and Prototyping

While rapid prototyping remains a cornerstone of 3D printing, its applications in manufacturing have expanded significantly. Industries can now produce intricate components, customize products, and iterate designs rapidly. The ability to create prototypes on-demand accelerates product development cycles and reduces costs.

2. Medical Breakthroughs

One of the most profound impacts of 3D printing is in healthcare. From patient-specific implants to prosthetics, and increasingly to research on bioprinted tissues, 3D printing is transforming medical treatment. Computing technologies contribute the precision required to create personalized medical devices, enhancing patient care and outcomes.

Computing Challenges in 3D Printing

1. Optimizing Print Parameters

The success of a 3D print relies on optimizing various parameters, including layer thickness, printing speed, and temperature control. Computing technologies aid in simulating and analyzing these parameters to achieve the desired print quality. Continuous improvements in algorithms contribute to the efficiency of the printing process.

2. Material Science and Simulation

The variety of materials used in 3D printing, from plastics and metals to bioinks, demands a nuanced understanding of material science. Computing technologies enable simulations that predict the behavior of materials during the printing process, ensuring the structural integrity and functionality of the final product.

Future Trends: Computing Horizons in 3D Printing

1. Multi-Material and Multi-Modal Printing

The future of 3D printing lies in the realm of multi-material and multi-modal capabilities. Advances in computing will play a pivotal role in orchestrating the printing of complex objects with different materials and properties. This opens avenues for creating integrated products with diverse functionalities in a single print run.

2. Generative Design and AI Integration

Generative design, powered by artificial intelligence (AI), is a promising trend in 3D printing. AI algorithms can explore countless design possibilities and optimize structures based on specific criteria. The integration of AI with 3D printing processes will usher in a new era of efficiency and creativity in design and manufacturing.

Computing’s Role in Accessibility and Innovation

1. Open-Source Communities

The accessibility of 3D printing owes much to open-source communities that share designs, software, and knowledge freely. Computing technologies facilitate collaboration within these communities, fostering innovation and democratizing the tools required for 3D printing. This collaborative spirit accelerates the evolution of the technology.

2. Educational Initiatives

3D printing is becoming an integral part of educational curricula, providing students with hands-on experience in design and manufacturing. Computing technologies enable educational initiatives by providing software tools and resources that empower students to explore their creativity and understand the principles of additive manufacturing.

Conclusion: Computing the Fabric of Innovation

The 3D printing revolution is a testament to the symbiotic relationship between computing technologies and human ingenuity. From …

Biometric Security – Unlocking the Future with Your Identity

In the ever-evolving landscape of digital security, traditional methods of password protection and PINs are being augmented, if not replaced, by more advanced and secure authentication methods. At the forefront of this evolution is biometric security, a technological marvel that utilizes unique physical or behavioral traits to grant access. As we delve into the era of biometric security, it becomes evident that computing technologies play a pivotal role in shaping the future of identity verification.

The Rise of Biometric Security

A Paradigm Shift

Biometric security leverages distinctive biological or behavioral characteristics to verify an individual’s identity. From fingerprint and facial recognition to iris and voice scans, biometrics provide a highly secure and convenient means of authentication. This paradigm shift in security is fueled by advancements in computing technologies that enable the efficient processing and analysis of biometric data.

The Computing Foundation

At the heart of biometric security lies the computing power that processes, stores, and matches vast datasets associated with individuals’ unique biometric traits. The algorithms and models used for biometric recognition require robust computing infrastructure to deliver accurate and near-instantaneous results.

Key Biometric Technologies

1. Fingerprint Recognition

Fingerprint recognition is one of the oldest and most widely adopted biometric technologies. Computing algorithms analyze the unique pattern of ridges and valleys on an individual’s fingertip, typically extracting features such as ridge endings and bifurcations (minutiae), to create a digital representation, known as a fingerprint template, for authentication purposes.

2. Facial Recognition

Facial recognition technology captures and analyzes facial features to identify individuals. The computing algorithms behind facial recognition map key facial landmarks and create a unique template. This technology is commonly used in smartphones, security systems, and even airport checkpoints.
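Many modern facial recognition systems reduce a face to a fixed-length numeric vector (an embedding) and compare vectors rather than raw images. Assuming such embeddings are already available from some model, a 1:1 verification check can be as simple as a cosine-similarity threshold. The vectors, the 0.8 threshold, and the function names below are illustrative assumptions, not values from any particular product.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two non-zero vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def verify_face(enrolled: list[float], probe: list[float], threshold: float = 0.8) -> bool:
    """1:1 verification: accept if the probe template is close enough to the enrolled one."""
    return cosine_similarity(enrolled, probe) >= threshold
```

Real embeddings have hundreds of dimensions, and the threshold is chosen from test data to balance false accepts against false rejects.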

Computing Challenges in Biometric Security

1. Accuracy and False Positives

Achieving high accuracy in biometric recognition is critical to the reliability of security systems. Recognition algorithms must be continually refined to minimize both false accepts (false positives) and false rejects (false negatives), so that unauthorized individuals are not granted access and legitimate users are not wrongly denied.
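This trade-off is usually measured with two rates: the false accept rate (FAR, the share of impostor attempts wrongly accepted) and the false reject rate (FRR, the share of genuine attempts wrongly rejected). Given match scores from a test set, a minimal sketch computes both at a chosen threshold; the function name and sample scores are hypothetical.

```python
def error_rates(genuine: list[float], impostor: list[float],
                threshold: float) -> tuple[float, float]:
    """Return (FAR, FRR) for a matcher that accepts when score >= threshold."""
    false_accepts = sum(1 for s in impostor if s >= threshold)
    false_rejects = sum(1 for s in genuine if s < threshold)
    return false_accepts / len(impostor), false_rejects / len(genuine)
```

For example, with genuine scores [0.9, 0.85, 0.7, 0.95] and impostor scores [0.2, 0.4, 0.75, 0.1], a threshold of 0.6 gives FAR = 0.25 and FRR = 0.0, while 0.8 gives FAR = 0.0 and FRR = 0.25. Raising the threshold trades one kind of error for the other.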

2. Data Security and Privacy Concerns

The sensitive nature of biometric data raises significant concerns regarding data security and privacy. Computing solutions for biometric security must implement robust encryption methods, secure storage protocols, and adhere to strict privacy regulations to safeguard individuals’ biometric information from unauthorized access or malicious use.

The Future: Advancements in Biometric Security

1. Behavioral Biometrics

Beyond physical characteristics, behavioral biometrics are gaining prominence. This involves analyzing unique patterns in an individual’s behavior, such as typing rhythm, mouse movements, or even gait. Advanced computing technologies enable real-time analysis of these behavioral traits for continuous and unobtrusive authentication.
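Keystroke dynamics, one form of behavioral biometrics, commonly relies on two timing features: dwell time (how long a key is held) and flight time (the gap between releasing one key and pressing the next). The sketch below extracts both from hypothetical `(key, press_ms, release_ms)` events and compares a sample against a stored profile using a simple mean absolute difference; real systems use richer statistics and learned models.

```python
def keystroke_features(events: list[tuple[str, float, float]]) -> dict[str, list[float]]:
    """Dwell and flight times (ms) from (key, press_ms, release_ms) events in typing order."""
    dwell = [release - press for _key, press, release in events]
    # Flight time: next key's press minus this key's release (negative if keys overlap).
    flight = [events[i + 1][1] - events[i][2] for i in range(len(events) - 1)]
    return {"dwell": dwell, "flight": flight}


def profile_distance(sample: dict[str, list[float]], profile: dict[str, list[float]]) -> float:
    """Mean absolute timing difference; assumes both come from typing the same phrase."""
    diffs = [
        abs(a - b)
        for feature in ("dwell", "flight")
        for a, b in zip(sample[feature], profile[feature])
    ]
    return sum(diffs) / len(diffs)
```

A small distance suggests the same typist; the acceptance cut-off would be tuned from enrollment data, just like the thresholds for physical biometrics.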

2. Multi-Modal Biometrics

To enhance security and reliability, multi-modal biometrics combine multiple biometric identifiers. This can involve using a combination of fingerprints, facial recognition, and voiceprints for a more robust authentication process. Computing technologies facilitate the integration and analysis of data from diverse biometric sources.
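One widely used way to combine modalities is score-level fusion: each matcher produces a normalized score, and the system decides on a weighted combination. The sketch below assumes scores are already scaled to [0, 1]; the modality names and weights are illustrative. Normalizing by the weights of the modalities actually present lets the system degrade gracefully when one sensor is unavailable.

```python
def fuse_scores(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted-sum fusion of per-modality match scores already normalized to [0, 1]."""
    # Only modalities that produced a score contribute to the normalizing total.
    total_weight = sum(weights[m] for m in scores)
    return sum(scores[m] * weights[m] for m in scores) / total_weight


weights = {"face": 0.5, "fingerprint": 0.3, "voice": 0.2}  # hypothetical weights
print(fuse_scores({"face": 0.9, "fingerprint": 0.6, "voice": 0.3}, weights))
print(fuse_scores({"face": 0.9, "voice": 0.3}, weights))  # fingerprint sensor unavailable
```

The fused score is then compared against a single acceptance threshold, so a strong match in one modality can partly offset a weak one in another.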

The Role of Computing in Biometric Security Implementation

1. Processing Speed and Real-Time Authentication

The efficiency of biometric security systems heavily relies on the processing speed of computing technologies. Real-time authentication, especially in scenarios like airport security or financial transactions, demands powerful computing systems capable of rapid and accurate biometric matching.

2. Database Management and Matching Algorithms

Biometric databases require sophisticated management systems to store, retrieve, and match biometric templates efficiently. Computing algorithms play a crucial role in comparing stored templates with real-time biometric data, ensuring a seamless and secure authentication process.
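Searching a database (1:N identification) differs from verifying a single claimed identity: the probe must be compared with every enrolled template, and the best match is accepted only if it clears a threshold. The linear-scan sketch below assumes templates are embedding vectors compared by cosine similarity; the gallery contents, threshold, and function names are hypothetical, and large deployments typically rely on indexing structures rather than scanning every record.

```python
import math
from typing import Optional


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def identify(probe: list[float], gallery: dict[str, list[float]],
             threshold: float = 0.8) -> Optional[str]:
    """Return the enrolled ID most similar to the probe, or None if no match clears the threshold."""
    best_id, best_score = None, -1.0
    for person_id, template in gallery.items():
        score = cosine_similarity(probe, template)
        if score > best_score:
            best_id, best_score = person_id, score
    # Rejecting weak best matches keeps unknown people from being forced onto an enrolled ID.
    return best_id if best_score >= threshold else None
```

The threshold matters more here than in 1:1 verification, because every additional enrolled template is another chance for an impostor to match by accident.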

Computing Trust: Overcoming Biometric Challenges

1. Anti-Spoofing Technologies

Biometric security must guard against spoofing attempts, where malicious actors try to trick the system using fake fingerprints, masks, or other imitation methods. Advanced anti-spoofing technologies, powered by computing solutions, continuously evolve to detect and prevent these fraudulent activities.

2. Continuous Monitoring and Adaptability

Continuous monitoring is vital in biometric security to detect anomalies or changes in an individual’s biometric patterns. Computing technologies enable adaptive systems that can learn and adjust over time, ensuring that the security measures stay effective even as individuals’ biometric traits naturally evolve.
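One simple way to let a system adapt as a person's traits drift is to blend each confidently matched sample into the stored template using an exponential moving average. This is a sketch under assumptions: templates are numeric vectors, and the function name and the `accept_threshold` and `alpha` values are hypothetical. Updating only on high-confidence matches reduces the risk of an impostor gradually steering the template toward their own traits.

```python
def update_template(template: list[float], new_sample: list[float], match_score: float,
                    accept_threshold: float = 0.9, alpha: float = 0.1) -> list[float]:
    """Blend a confidently matched sample into the stored template (exponential moving average)."""
    # Skip adaptation on weaker matches so the template cannot be drifted by borderline attempts.
    if match_score < accept_threshold:
        return template
    # A small alpha changes the template slowly, tracking gradual change rather than noise.
    return [(1 - alpha) * t + alpha * s for t, s in zip(template, new_sample)]
```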

Conclusion: The Future Unlocked with Biometric Security and Computing

Biometric security, propelled by the relentless advancements in computing technologies, is revolutionizing the way we authenticate and verify identities. As we move toward a future where passwords may become obsolete, biometrics offer a secure and convenient alternative. The synergy between biometric technologies and computing is unlocking new frontiers in security, providing individuals and organizations with powerful tools to protect sensitive information and secure access to digital systems.

While challenges such as accuracy, privacy concerns, and evolving threats persist, the continuous evolution of computing technologies ensures that biometric security remains at the forefront of identity verification. As computing power and algorithms become more sophisticated, the future promises not only enhanced security but also a seamless and user-friendly experience, marking a paradigm shift in the way we interact with the digital world.…

Drones in Action – From Hobby to High-Tech Applications

Drones, once relegated to the realm of hobbyists and enthusiasts, have rapidly ascended to become versatile tools with applications spanning various industries. From aerial photography and recreational flying to complex high-tech missions, drones are now an integral part of our technological landscape. This article explores the evolution of drones, examining their journey from a hobbyist’s pastime to high-tech applications, with a focus on the computing technologies that have propelled their capabilities to new heights.

The Rise of Drones as a Hobby

Early Days and Recreational Soaring

Drones, also known as Unmanned Aerial Vehicles (UAVs) or Unmanned Aircraft Systems (UAS), initially gained popularity as recreational gadgets. Enthusiasts were drawn to the thrill of flying these unmanned devices, capturing breathtaking aerial views and experimenting with aerial maneuvers. The advent of affordable consumer drones equipped with cameras marked a turning point, making drone flying a widespread hobby.

The Role of Computing in Hobbyist Drones

Even in their early stages, computing technologies played a pivotal role in hobbyist drones. Onboard flight controllers, equipped with sensors like accelerometers and gyroscopes, enabled drones to maintain stability and respond to user inputs. These computing components laid the foundation for the intuitive flight experiences enjoyed by hobbyist drone pilots.
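A classic way to combine those two sensors is a complementary filter. The gyroscope's integrated rate is smooth but drifts over time, while the accelerometer's gravity-based angle is noisy but does not drift, so the filter trusts the gyro in the short term and the accelerometer in the long term. The sketch estimates pitch only. The blend factor `alpha = 0.98`, the simulated gyro bias, and the axis conventions are illustrative assumptions, and many production flight controllers use more elaborate estimators such as Kalman filters.

```python
import math

def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch angle (radians) implied by the gravity vector the accelerometer measures.
    Axis and sign conventions vary between sensors; this sketch assumes one of them."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))

def complementary_filter(pitch: float, gyro_rate: float, accel_angle: float,
                         dt: float, alpha: float = 0.98) -> float:
    """Blend the integrated gyro rate (smooth but drifting) with the
    accelerometer angle (noisy but drift-free)."""
    return alpha * (pitch + gyro_rate * dt) + (1 - alpha) * accel_angle

# Simulate a flight controller held still at a 0.1 rad tilt for 20 s,
# with a gyro that has a hypothetical 0.02 rad/s bias.
G, TRUE_PITCH, GYRO_BIAS, DT = 9.81, 0.1, 0.02, 0.01
ax, az = -G * math.sin(TRUE_PITCH), G * math.cos(TRUE_PITCH)
fused = gyro_only = TRUE_PITCH
for _ in range(2000):
    gyro_only += GYRO_BIAS * DT  # pure integration accumulates the bias
    fused = complementary_filter(fused, GYRO_BIAS, accel_pitch(ax, 0.0, az), DT)
```

After 20 simulated seconds the gyro-only estimate has drifted by about 0.4 rad, while the fused estimate settles within about 0.01 rad of the true tilt.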

Transformative Applications: Drones Go Pro

1. Aerial Photography and Filmmaking

The integration of high-quality cameras into drones opened up new possibilities in aerial photography and filmmaking. Drones equipped with advanced imaging capabilities became powerful tools for capturing stunning visuals from vantage points that were previously inaccessible. The computing technologies behind camera stabilization systems, automated flight modes, and real-time image processing contributed to the rise of drones as indispensable tools for photographers and filmmakers.

2. Precision Agriculture

Drones found practical applications in agriculture, where they have transformed precision farming. Equipped with sensors and cameras, agricultural drones can survey large fields, analyze crop health, and provide farmers with valuable insights. Computing technologies let these drones process data in real time or shortly after a flight, offering actionable information on irrigation, pest control, and crop management.
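The text does not say how crop health is analyzed. One widely used approach with multispectral imagery is the Normalized Difference Vegetation Index (NDVI): healthy vegetation reflects strongly in near-infrared (NIR) and absorbs red light, so NDVI = (NIR - Red) / (NIR + Red) tends to be high over vigorous canopy. The sketch below uses plain Python lists for clarity, whereas a real pipeline would work on calibrated reflectance with an array library. The 0.3 cut-off is a hypothetical value, since meaningful thresholds depend on crop and growth stage.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red), in [-1, 1]."""
    total = nir + red
    if total == 0:
        return 0.0  # no signal in either band; treat as neutral
    return (nir - red) / total

def ndvi_map(nir_band: list[list[float]], red_band: list[list[float]]) -> list[list[float]]:
    """Per-pixel NDVI for two co-registered reflectance grids of the same shape."""
    return [[ndvi(n, r) for n, r in zip(nir_row, red_row)]
            for nir_row, red_row in zip(nir_band, red_band)]

def low_vigor_pixels(ndvi_grid: list[list[float]], threshold: float = 0.3) -> list[tuple[int, int]]:
    """(row, col) of pixels below a hypothetical threshold, as candidates for scouting."""
    return [(i, j) for i, row in enumerate(ndvi_grid)
            for j, value in enumerate(row) if value < threshold]

# Hypothetical 2 x 2 reflectance grids: three healthy pixels and one sparse patch.
nir = [[0.50, 0.45], [0.30, 0.48]]
red = [[0.08, 0.06], [0.25, 0.07]]
grid = ndvi_map(nir, red)
```

Here `low_vigor_pixels(grid)` flags only the pixel at row 1, column 0, whose NDVI is about 0.09.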

Computing Power Takes Drones to New Heights

1. Advanced Flight Control Systems

Modern drones rely on sophisticated flight control systems powered by advanced computing algorithms. These systems not only ensure stable flight but also enable autonomous features such as waypoint navigation, follow-me modes, and obstacle avoidance. The evolution of these computing components has made drones more user-friendly and accessible to a broader audience.
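Waypoint navigation boils down to repeatedly computing the distance and heading to the current target and switching to the next waypoint once the drone is close enough. The sketch below uses the standard haversine distance and initial-bearing formulas on a spherical Earth. The `next_target` helper and its 2 m acceptance radius are hypothetical illustrations, not any particular autopilot's logic.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius

def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two lat/lon points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def bearing_deg(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Initial bearing from point 1 to point 2, degrees clockwise from north in [0, 360)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlmb = math.radians(lon2 - lon1)
    x = math.sin(dlmb) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb)
    return (math.degrees(math.atan2(x, y)) + 360) % 360

def next_target(position, waypoints, index, acceptance_radius_m=2.0):
    """Skip waypoints already within the acceptance radius.
    Returns (index, distance_m, bearing_deg) for the active waypoint,
    or (len(waypoints), 0.0, None) once the mission is finished."""
    while index < len(waypoints):
        lat, lon = waypoints[index]
        dist = haversine_m(position[0], position[1], lat, lon)
        if dist > acceptance_radius_m:
            return index, dist, bearing_deg(position[0], position[1], lat, lon)
        index += 1
    return index, 0.0, None
```

A flight controller would call something like `next_target` every control cycle and feed the returned bearing and distance to its position controller.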

2. Computer Vision and Object Recognition

The integration of computer vision and object recognition technologies has enhanced the capabilities of drones in various applications. Drones equipped with cameras and AI algorithms can identify and track objects, people, and even specific features of the environment. This is particularly valuable in search and rescue missions, surveillance, and environmental monitoring.
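Once a detector (typically a neural network, outside the scope of this sketch) has drawn bounding boxes in each frame, tracking means deciding which new box belongs to which existing object. A common building block is intersection-over-union (IoU) matching. The greedy association below is a simplified relative of trackers such as SORT, which additionally use a Kalman filter for motion prediction and the Hungarian algorithm for optimal assignment. Here unmatched tracks are simply dropped, and the 0.3 IoU cut-off is an assumed value.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2) with x1 < x2, y1 < y2."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def associate(tracks, detections, min_iou=0.3):
    """Greedily match tracks (dict of int id -> box) to new detections (list of boxes)
    by highest IoU. Unmatched detections start new track ids; unmatched tracks are dropped."""
    pairs = sorted(
        ((iou(box, det), tid, j) for tid, box in tracks.items() for j, det in enumerate(detections)),
        reverse=True,
    )
    updated, used_tracks, used_dets = {}, set(), set()
    for score, tid, j in pairs:
        if score < min_iou:
            break  # pairs are sorted, so every remaining pair scores lower
        if tid in used_tracks or j in used_dets:
            continue
        updated[tid] = detections[j]
        used_tracks.add(tid)
        used_dets.add(j)
    next_id = max(tracks, default=-1) + 1
    for j, det in enumerate(detections):
        if j not in used_dets:
            updated[next_id] = det
            next_id += 1
    return updated
```

For example, a person tracked as id 0 at `(0, 0, 10, 10)` who reappears at `(1, 1, 11, 11)` keeps id 0, while a new box far away receives id 1.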

Drones in High-Tech Applications

1. Delivery Services and Logistics

Drones are making waves in the logistics industry, promising faster and more efficient delivery services. Companies are exploring the use of drones to transport small packages and medical supplies to remote or inaccessible areas. The computing technologies that enable precise navigation, route optimization, and obstacle avoidance are critical in making drone deliveries a reality.
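Route optimization for a drone visiting several drop-off points is a variant of the travelling salesman problem. The sketch below uses the simple nearest-neighbour heuristic: always fly to the closest unvisited stop, then return to the depot. It is fast but not guaranteed to find the shortest route, and it assumes a flat local grid in metres. Real planners would also account for battery limits, payload weight, and no-fly zones.

```python
import math

def route_length(points, order):
    """Total straight-line distance along the given visiting order."""
    return sum(math.dist(points[order[i]], points[order[i + 1]]) for i in range(len(order) - 1))

def nearest_neighbor_route(depot, stops):
    """Visit every stop once, always flying to the closest unvisited one, then return home.
    Points are (x, y) in metres on a local flat grid (reasonable only for short ranges).
    Returns (order of point indices, total length); index 0 is the depot."""
    points = [depot] + list(stops)
    unvisited = set(range(1, len(points)))
    order = [0]
    while unvisited:
        here = points[order[-1]]
        # Break distance ties by index so the result is deterministic.
        nxt = min(unvisited, key=lambda i: (math.dist(here, points[i]), i))
        order.append(nxt)
        unvisited.remove(nxt)
    order.append(0)
    return order, route_length(points, order)
```

With the depot at `(0, 0)` and stops at `(10, 0)`, `(1, 0)`, and `(5, 0)`, the heuristic visits the stops in order of distance along the line and returns home, for a 20 m round trip.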

2. Environmental Monitoring and Conservation

Drones equipped with specialized sensors are becoming invaluable tools for environmental monitoring and conservation efforts. They can survey vast areas, track wildlife, and monitor changes in ecosystems. The computing infrastructure behind data processing and analysis contributes to the accuracy and efficiency of these environmental missions.
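Surveying a large area usually means flying a back-and-forth "lawnmower" (boustrophedon) pattern whose pass spacing is set by how much ground the camera sees. The sketch below computes the ground footprint of a downward-pointing camera as 2 × altitude × tan(FOV / 2) and spaces passes evenly so that adjacent strips touch. It assumes a rectangular area in a local metre grid and, for simplicity, no extra side overlap, which photogrammetric surveys normally add.

```python
import math

def footprint_width_m(altitude_m: float, fov_deg: float) -> float:
    """Ground width seen by a nadir-pointing camera: 2 * altitude * tan(FOV / 2)."""
    return 2 * altitude_m * math.tan(math.radians(fov_deg) / 2)

def lawnmower_path(width_m: float, height_m: float, swath_m: float) -> list[tuple[float, float]]:
    """Back-and-forth waypoints covering a width x height rectangle.
    Passes run along y and are evenly spaced along x, no further apart than the swath."""
    if swath_m <= 0 or width_m <= 0 or height_m <= 0:
        raise ValueError("dimensions and swath must be positive")
    passes = math.ceil(width_m / swath_m)
    spacing = width_m / passes  # <= swath_m, so neighbouring strips touch or overlap
    waypoints = []
    for k in range(passes):
        x = (k + 0.5) * spacing  # centre line of strip k
        start, end = (0.0, height_m) if k % 2 == 0 else (height_m, 0.0)
        waypoints.extend([(x, start), (x, end)])
    return waypoints
```

For example, a camera with a 90° field of view at 50 m altitude covers a strip about 100 m wide, while a 30 m swath over a 100 m × 50 m plot needs four passes spaced 25 m apart.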

Challenges and Considerations in High-Tech Drone Applications

1. Regulatory Frameworks

The widespread adoption of drones in high-tech applications has led to the development of regulatory frameworks to ensure safe and responsible drone use. Governments and aviation authorities are working to strike a balance between fostering innovation and addressing concerns related to airspace safety, privacy, and security.

2. Battery Technology and Flight Endurance

Despite advances in computing, battery technology remains a limiting factor in drone flight endurance. High-tech applications often require extended flight times, and researchers are actively developing batteries that store more energy per unit of weight to address this challenge.
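A back-of-the-envelope estimate shows why energy density matters so much: flight time is roughly usable battery energy divided by average power draw, and anything that raises the draw (extra payload, wind, a heavier battery) cuts endurance proportionally. The pack, power figures, and the 80% usable fraction (a safety margin against deep discharge) below are hypothetical. Real endurance also depends on temperature and battery ageing.

```python
def flight_endurance_min(capacity_mah: float, voltage_v: float, avg_power_w: float,
                         usable_fraction: float = 0.8) -> float:
    """Rough flight time in minutes: usable battery energy / average electrical power draw."""
    if avg_power_w <= 0:
        raise ValueError("average power must be positive")
    energy_wh = capacity_mah / 1000 * voltage_v * usable_fraction
    return energy_wh / avg_power_w * 60

# Hypothetical 4S LiPo pack (4 x 3.7 V nominal = 14.8 V) of 5000 mAh on a drone drawing 200 W.
base = flight_endurance_min(5000, 14.8, 200)
heavier = flight_endurance_min(5000, 14.8, 250)  # e.g. extra payload raises power draw to 250 W
```

The pack stores 74 Wh, of which about 59 Wh is treated as usable: roughly 17.8 minutes at 200 W, dropping to about 14.2 minutes at 250 W.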

Future Trends: Computing Horizons for Drones

1. Swarm Intelligence

The concept of drone swarms, where multiple drones collaborate and communicate to achieve a common goal, is gaining traction. Swarm intelligence leverages advanced computing algorithms to enable coordinated and efficient group behavior. This has applications in various fields, from search and rescue missions to agricultural tasks.
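Craig Reynolds's boids model (1987) is the classic illustration of coordinated group behaviour emerging from purely local rules: each agent steers toward its neighbours' centre (cohesion), matches their average velocity (alignment), and backs away from any that are too close (separation). The sketch below implements one synchronous update of those three rules in 2-D. It illustrates the idea and does not describe any particular drone-swarm system. The weights, radii, and `Drone` type are hypothetical, and real swarms add hard collision-avoidance guarantees, communication limits, and mission objectives.

```python
import math
from dataclasses import dataclass

@dataclass
class Drone:
    x: float
    y: float
    vx: float
    vy: float

def step(swarm, radius=10.0, min_sep=2.0, w_coh=0.01, w_align=0.05, w_sep=0.1, dt=1.0):
    """One synchronous boids-style update using only neighbours within `radius`."""
    new = []
    for d in swarm:
        nbrs = [o for o in swarm if o is not d and math.hypot(o.x - d.x, o.y - d.y) < radius]
        ax = ay = 0.0
        if nbrs:
            n = len(nbrs)
            # Cohesion: steer toward the neighbours' centre of mass.
            ax += w_coh * (sum(o.x for o in nbrs) / n - d.x)
            ay += w_coh * (sum(o.y for o in nbrs) / n - d.y)
            # Alignment: steer toward the neighbours' average velocity.
            ax += w_align * (sum(o.vx for o in nbrs) / n - d.vx)
            ay += w_align * (sum(o.vy for o in nbrs) / n - d.vy)
            # Separation: push away from neighbours closer than min_sep.
            for o in nbrs:
                dist = math.hypot(o.x - d.x, o.y - d.y)
                if 0 < dist < min_sep:  # exact overlap has no direction, so it is skipped
                    ax += w_sep * (d.x - o.x) / dist
                    ay += w_sep * (d.y - o.y) / dist
        vx, vy = d.vx + ax * dt, d.vy + ay * dt
        new.append(Drone(d.x + vx * dt, d.y + vy * dt, vx, vy))
    return new
```

Because each drone reads only its neighbours' state, the same rule can run independently on every aircraft; calling `step` repeatedly makes nearby drones converge on a common heading while keeping their distance.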

2. 5G Connectivity

The integration of 5G connectivity is expected to expand drone capabilities significantly. High-speed, low-latency 5G networks enable real-time data transmission, improving the responsiveness of drones in applications such as live streaming, remote operation, and collaborative missions.

Conclusion: Soaring with Computing Wings

From humble beginnings as a hobbyist’s delight to high-tech applications that redefine industries, drones have soared to new heights, guided by the wings of computing technologies. The evolution of drones showcases the transformative impact that computing has had …